At TWOSENSE.AI, our team builds enterprise products for continuous biometric authentication, where Machine Learning (ML) is the backbone of almost every feature. If you missed it, we already dug into why we need to run our ML team with an Agile Software Engineering (SWE) process, as well as what our SWE process looks like and why.

One of our core operating principles at TWOSENSE.AI is “don’t reinvent the wheel,” so when we realized we needed an agile process for ML engineering (MLE), the first thing we did was go looking for what we could learn from others.

We didn’t find exactly what we were looking for, but we did find a lot of smart content that means we don’t have to start from scratch. We’d like to share those findings with you here. This is a long one; there’s a TL;DR in the conclusion at the bottom.

MATCHING SWE PARADIGMS IN DS

Early stabs at Agile processes for Data Science (DS) tried to find analogous representations of SWE components as they apply to DS. For example, they treated experiment design as a replacement for test-driven development and iterative coding, a phase of analysis as a replacement for a retro, and show-and-tell as a replacement for code review. [Side note: read the comments section of that post to see some of the arguments against mixing Agile and DS.]

There’s a 2013 book by Russell Jurney on the topic that looks at the infrastructure and different tools for DS. Much of the early content from that time focuses on technology, e.g. platforms, libraries, pipelines, etc. From our point of view, this is helpful but puts the cart before the horse. At TWOSENSE.AI we think you should start with the process, and then find the technology that best supports that process.

A clear problem with the de facto waterfall approach to the DS process is that it drifts out of alignment with changing business goals over the lifetime of a project. There is an emphasis on using the paradigm of sprints, epics, and stories to periodically re-evaluate that alignment in a show-and-tell atmosphere. Another big theme here is that Agile is as much a way of thinking as it is a set of steps or a codified process. That way of thinking is about taking something big and ambitious and breaking it down into smaller steps that can be shown to, and resynced with, external stakeholders and business goals.

This is summarized in a more recent manifesto for agile data science [RECOMMENDED READ], again by Jurney, where he introduces the concept of making the result of every sprint shippable, just like what we know from Agile SWE. This avoids the “death loop” of endless internal iterations as the DS process readjusts to changing conditions. The literature to this point feels a bit short of a real Agile process, but we can already see the first inklings of one: Agile in DS is a way of thinking about big problems, breaking them into small pieces that are realigned with changing priorities, and shipping those iterations.

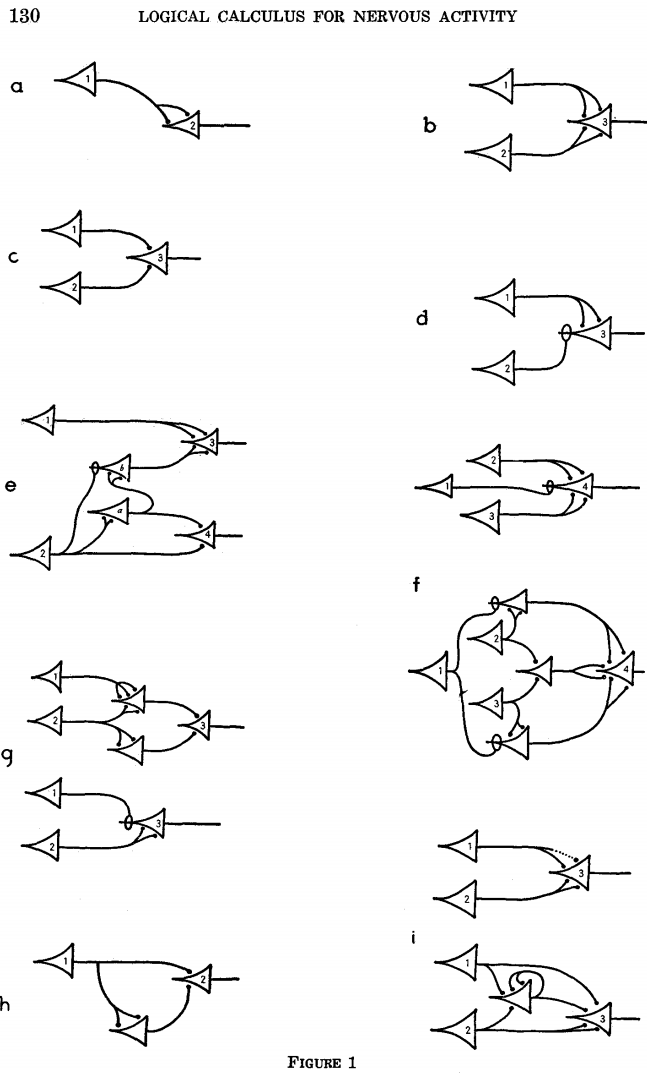

From: McCulloch and Pitts 1943. We’ve come a long way since the first hand-drawn Artificial Neural Networks, but using what we’ve learned to build real-world solutions is still not straightforward.

SEPARATING DATA SCIENTISTS AND SOFTWARE ENGINEERS

Some organizations chose to leave the DS process alone and looked for paradigm analogies, like using a demo as the DS analog of SWE code review. They combined this with changing the way they think about problem-solving. However, this skirts the inherent issues of applying Agile SWE rigor to data science. The major argument for leaving DS as a waterfall approach is that it is an inherently holistic process that can’t, or shouldn’t, be broken down. Several authors take the pragmatic approach of splitting the process into research and engineering, applying Agile to the latter while leaving the former as a waterfall. Here we see the first references to making the process scalable, i.e. allowing more people to be added to a task to speed it up, which is a great goal. Specifically, these authors recommend a heterogeneous team consisting of data scientists, data engineers, and software engineers. The argument, again pragmatic, is that it is nearly impossible to find engineers capable of performing in both research and SWE roles. Pragmatism is always the right way to go for a startup, but a chain is only as strong as its weakest link. Having a process in which product engineers need to wait for a waterfall DS task to complete before they can start incorporating results into the product leaves us feeling uneasy at TWOSENSE.AI.

AGILE PROCESSES FROM DATA SCIENCE

There are some great pieces shared from the trenches of early attempts. One of the first lessons is to scope each individual DS task by the type of work being done, e.g. literature review, data exploration, algorithm development, result analysis, review, and deployment phases for each sprint or story [RECOMMENDED READ]. This is a great piece of insight because it shows us their approach to actually breaking down a big thing into smaller steps and tasks as it relates to DS. We also see the concept of skipping or reassessing the remaining tickets based on what we learn during execution. This concept is so exciting because it addresses one of the largest concerns of Agile ML critics: you don’t know what you don’t know. The big takeaway here is that you need to be continuously adjusting throughout the sprint based on what you’re learning. This goes against mainstream Agile SWE wisdom since it makes projects more difficult to plan. However, it’s a pragmatic way to plan while still accounting for the intrinsic problem of not knowing what you don’t know. Trying to enforce strict adherence to the plan in the face of new understanding feels to me like it would detract from the focus on the sprint goals, like dogma instead of pragmatism.

With this dynamic process, it’s important to stay focused and make each ticket or task something with a tangible benefit. A great piece [RECOMMENDED READ] articulates the iterative nature of this process, starting off with a baseline and iteratively improving it with each task or ticket. With a dynamic process that includes many unknowns and iterative improvements, it is also dangerous to be overly focused on story points [RECOMMENDED READ]. Doing so can encourage gamification of the process, where engineers optimize for the human performance metrics at the cost of ML performance. It’s important to weigh the benefit of a small iteration against the context-switching cost of picking the work back up in a later iteration. The same could easily be said about Agile SWE. The difference here is that DS/ML tasks are far more often about answering a question. One way to address this is to acknowledge that some jobs just don’t have deliverable code results [RECOMMENDED READ]. These should still be reviewed, but shared as show-and-tell or demos.

FROM AGILE DATA SCIENCE TO AGILE MACHINE LEARNING

So far, we’ve looked at Agile DS and gotten as much as possible from the literature that seems relevant to MLE as well. One thing to keep in mind is that the vast majority of what we’ve been speaking about thus far was designed for very long timelines. The article above was speaking about sprints on a timeline of about 1 year (!!), so while many of the lessons learned are still relevant, there may be quite a bit further to go to get things in line with a 2-week sprint. Furthermore, they’re looking at DS, which is not the same as MLE. DS is about finding questions the data can answer, and the answers to those questions, where the consumer is most often a human. A DS sprint is therefore focused on answering a question. MLE is about starting with a problem independent of the data and building an ML solution to it, where the consumer is usually a piece of software or an actuator of some kind.

AGILE FOR MACHINE LEARNING ENGINEERING

When we dive into what’s been published on Agile for MLE, the literature is much thinner. A company called YellowRoad (I’m not sure what they do exactly, but I’m sure they do it really well!) has put out some great content. They propose starting off with quick solutions over optimal ones [RECOMMENDED READ] as a way to make things iterative yet shippable. Google famously “blur the line between research and engineering activities” and say just about the same thing. <CONJECTURE> There is a vast chasm between knowing what the best solution is and implementing that in a product. Bridging that chasm is the hardest part, not just in DS/ML, but in startups, art, everything: it’s called “execution.” Trying to find a process that closes that gap and unifies research and product dev is really hard, but you only have to invest that effort once. It’s what we’re trying to accomplish with all of this here. Having to bridge that gap every quarter, every sprint, or even every ticket feels like an ongoing cost that will be fatal for a startup’s execution. I think that this is also what Google discovered, and why they’ve made the decisions that they have.</CONJECTURE>

Google also says [RECOMMENDED READ] (Rule #4) that it’s better to start with a bare-bones model (we call it a skeleton) and focus on building the infrastructure first, then build out the ML iteratively once that’s in place. They even go a step further (Rule #1) and say to try to start off with an expert system as your baseline, so that you can focus on the infrastructure alone before you even get into ML at all. Those rules are fantastic; I recommend at least scanning all 43. On top of that, they have a great paper on ML technical debt [RECOMMENDED READ] that, while slightly off-topic here, will greatly help anyone on the same journey with us. It also concludes by saying that even if your team isn’t made up of full-stack ML engineers, these processes and the technical debt affect both SWEs and DSs, and there needs to be buy-in from both sides and tight integration between them.
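To make the skeleton-first idea concrete, here is a minimal Python sketch of how it could look. This is our own illustration, not code from Google's rules: the names `Scorer`, `RuleBasedScorer`, and `decide`, as well as the threshold heuristic, are all hypothetical. The point is only that the rest of the pipeline depends on an interface, so a hand-written rule can ship first and an ML model can replace it later.

```python
from typing import Protocol, Sequence


class Scorer(Protocol):
    """Hypothetical interface that the rest of the pipeline depends on."""

    def score(self, features: Sequence[float]) -> float:
        ...


class RuleBasedScorer:
    """Hand-written heuristic used as the first shippable baseline (no ML)."""

    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold

    def score(self, features: Sequence[float]) -> float:
        # Assumed heuristic: the fraction of features above the threshold.
        if not features:
            return 0.0
        return sum(f > self.threshold for f in features) / len(features)


def decide(scorer: Scorer, features: Sequence[float], cutoff: float = 0.5) -> bool:
    """Downstream code only sees the Scorer interface, never the implementation."""
    return scorer.score(features) >= cutoff
```

Any later model that exposes the same `score` method could be passed to `decide` without touching the surrounding infrastructure, which is what lets the infrastructure be built and shipped before the ML exists.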

In a recent conversation with Sven Strohband of Khosla, Sven stated that every ML/AI company he’s worked with that was able to execute successfully followed a “Challenger Champion” approach to MLE. This is a concept that stems from the early days of financial modeling (as far as I can tell), where an incumbent system (the Champion) is A/B tested against a proposed improvement (the Challenger). I love this concept because, when combined with rigorous evaluation metrics, it creates a form of end-to-end testing where the metrics are the test. It also creates a Kaizen culture, which is one of my favorite concepts, where small changes that improve the whole are adopted over time.
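As a rough illustration of the Champion/Challenger comparison described above, here is a short Python sketch. It is a simplification under our own assumptions: it compares the two models offline on a fixed labeled set rather than running a live A/B test, it uses plain accuracy as the metric, and the names `accuracy`, `pick_champion`, and `min_gain` are hypothetical.

```python
from typing import Callable, Sequence, Tuple

Model = Callable[[float], int]   # maps one feature value to a predicted label
Example = Tuple[float, int]      # (feature value, true label)


def accuracy(model: Model, examples: Sequence[Example]) -> float:
    """Fraction of examples the model labels correctly."""
    if not examples:
        raise ValueError("need at least one labeled example")
    correct = sum(model(x) == y for x, y in examples)
    return correct / len(examples)


def pick_champion(
    champion: Model,
    challenger: Model,
    examples: Sequence[Example],
    min_gain: float = 0.01,
) -> Tuple[Model, float, float]:
    """Promote the challenger only if it beats the champion by at least min_gain."""
    champ_acc = accuracy(champion, examples)
    chall_acc = accuracy(challenger, examples)
    winner = challenger if chall_acc - champ_acc >= min_gain else champion
    return winner, champ_acc, chall_acc


# Toy data and two threshold "models", purely for illustration.
examples = [(0.1, 0), (0.4, 0), (0.6, 1), (0.9, 1)]


def champion(x: float) -> int:
    return int(x > 0.7)


def challenger(x: float) -> int:
    return int(x > 0.5)


winner, champ_acc, chall_acc = pick_champion(champion, challenger, examples)
```

The `min_gain` margin is one possible way to keep noise from promoting a challenger that is not really better. In practice the metric and the margin would be whatever is relevant to your product.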

CONCLUSION

Before designing our process, we read everything we could find on the topic. Here’s what we took away.

The TL;DR is this. As it applies to Data Science and Machine Learning, Agile is a way of thinking more than anything else. It’s about creating a process that breaks big things down into small tasks, continually readjusts those tasks for changing requirements, and ships as often as the product does. Make it scalable by starting off with the bare minimum that touches all components, then iterating on the different pieces from there. Try to beat the performance of the incumbent system with your “challenger” improvement by measuring against performance metrics that are relevant to you. Watch out for overly focusing on story points along the way, and use demos to show non-shippable results. Finally, if you want to work in an Agile way, you’re going to need a process that combines Research and Software Engineering efforts into Agile Machine Learning Engineering.